Cinemachine Source Code Analysis


TungLam

Core Concepts and Basic Flow

Core Concepts

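CinemachineCore: CinemachineCore is a global singleton. It is the data-structure manager for all CinemachineBrain instances and virtual cameras, and it also provides the interface for computing virtual camera updates and dispatches events.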

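CinemachineBrain: CinemachineBrain is the manager that drives the update computation of virtual cameras. It is bound to a real camera (normally it sits on the same GameObject as the MainCamera) and ultimately applies the result of the currently active virtual camera to that real camera. It is also responsible for computing the blend between two virtual cameras.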

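CinemachineVirtualCamera: CinemachineVirtualCamera is the "virtual camera" in the everyday sense, essentially the camera's "configuration file": its settings specify how the camera should move. Internally it is composed of a Component Pipeline divided into several pipeline stages (Body, Aim, Noise, and so on), and each stage can use one component algorithm:

- Body: controls the camera's position

- Aim: controls the camera's orientation (rotation)

- Noise: adds camera shake

- Finalize: applies the combined result of the stages above to the real camera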

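CinemachineExtension: while a virtual camera is being computed, an extension inserts extra computation into callbacks that the upper layer issues at specific points, for example just before the Component Pipeline starts, or when each Stage of the Component Pipeline finishes.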
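To make the stage order and the extension hook points concrete, here is a minimal Python sketch. It is not Cinemachine's API: CameraState, Stage, Extension, pre_pipeline, post_stage, ClampPosition and run_pipeline are hypothetical names, the camera state is reduced to a 1-D position and a yaw angle, and each stage holds at most one component.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Stage(Enum):
    BODY = 0      # position
    AIM = 1       # orientation
    NOISE = 2     # shake
    FINALIZE = 3  # last stage before the result is used


@dataclass
class CameraState:
    # Hypothetical, simplified state: a 1-D position and a yaw angle in degrees
    position: float = 0.0
    yaw: float = 0.0
    trace: List[str] = field(default_factory=list)


class Extension:
    """Hypothetical extension with two hook points (names are illustrative)."""

    def pre_pipeline(self, state: CameraState) -> None:
        pass

    def post_stage(self, stage: Stage, state: CameraState) -> None:
        pass


class ClampPosition(Extension):
    """Example extension: clamps the position right after the Body stage."""

    def __init__(self, limit: float) -> None:
        self.limit = limit

    def post_stage(self, stage: Stage, state: CameraState) -> None:
        if stage is Stage.BODY:
            state.position = min(state.position, self.limit)


def run_pipeline(state: CameraState,
                 components: Dict[Stage, Callable[[CameraState], None]],
                 extensions: List[Extension]) -> CameraState:
    # Hook: before any pipeline stage runs
    for ext in extensions:
        ext.pre_pipeline(state)
    # Stages run in a fixed order; each stage has at most one component
    for stage in Stage:
        component = components.get(stage)
        if component is not None:
            component(state)
        state.trace.append(stage.name)
        # Hook: after each stage has finished
        for ext in extensions:
            ext.post_stage(stage, state)
    return state
```

For example, a Body component that moves the camera to 10 combined with ClampPosition(5.0) leaves the position at 5, because the extension runs right after the Body stage and before Aim.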

For more detailed explanations and usage, see: Cinemachine Camera详细讲解和使用 (Cinemachine Camera: detailed explanation and usage)

Basic Flow

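The overall execution flow of Cinemachine can be summarized as follows:

Below, taking CinemachineBrain.ManualUpdate as the entry point, we walk through the code for each of the three flows above.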

Computing the Blend

The blend computation lives mainly in two functions called from CinemachineBrain.ManualUpdate: UpdateFrame0 and ComputeCurrentBlend.

```csharp
public void ManualUpdate()
```

Before looking at the logic of these two functions, let's first cover a few prerequisite concepts:

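BrainFrame and mFrameStack:

- BrainFrame: stores the data that CinemachineBrain has to process within one frame; as the code shows, this is mainly blend-related data.
- mFrameStack: stores several BrainFrame instances. In practice, processing mostly uses only the first element of this collection (the remaining elements are presumably meant for Timeline), and UpdateFrame0 is what updates that first element.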

```csharp
class CinemachineBrain
```

CinemachineBlend: stores the data used for blend computation, such as the two cameras being blended, the blend duration, and the current blend progress.

```csharp
public class CinemachineBlend
```

BlendSourceVirtualCamera: wraps a CinemachineBlend object as a virtual camera so that a blend in progress can serve as an intermediate result during camera blending.

```csharp
internal class BlendSourceVirtualCamera : ICinemachineCamera
```
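The relationship between a blend and its wrapper can be sketched as a simplified Python model rather than the real classes. Blend, BlendSourceCamera and FixedCamera are hypothetical stand-ins for CinemachineBlend, BlendSourceVirtualCamera and an ordinary virtual camera, and the camera "state" is reduced to one float position mixed by the blend weight. The point it illustrates is that, because the wrapper exposes the same interface as a camera, an in-progress blend can itself be the starting camera of another blend.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Protocol


class Camera(Protocol):
    def position(self) -> float: ...


@dataclass
class FixedCamera:
    # Hypothetical leaf camera with a fixed 1-D position
    pos: float

    def position(self) -> float:
        return self.pos


@dataclass
class Blend:
    # Two cameras, a curve, a duration and the current progress
    cam_a: Optional[Camera]
    cam_b: Camera
    curve: Callable[[float], float]
    duration: float
    time_in_blend: float = 0.0

    @property
    def is_complete(self) -> bool:
        return self.cam_a is None or self.time_in_blend >= self.duration

    def weight(self) -> float:
        # How far we are from cam_a (0) to cam_b (1), shaped by the curve
        if self.cam_a is None or self.duration <= 0:
            return 1.0
        t = min(max(self.time_in_blend / self.duration, 0.0), 1.0)
        return self.curve(t)

    def position(self) -> float:
        if self.cam_a is None:
            return self.cam_b.position()
        w = self.weight()
        return (1 - w) * self.cam_a.position() + w * self.cam_b.position()


@dataclass
class BlendSourceCamera:
    # Wraps an in-progress blend so it can be used wherever a camera is expected
    blend: Blend

    def position(self) -> float:
        return self.blend.position()
```

For instance, a half-finished linear blend from a camera at 0 to one at 10 sits at 5, and a new half-finished blend from that wrapped blend to a camera at 20 sits at 12.5.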

UpdateFrame0

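First, TopCameraFromPriorityQueue is called to get the camera with the highest priority, which becomes the active camera for the current frame (activeCamera). The previous frame's active camera (outGoingCamera) is then read from mFrameStack: it is mFrameStack[0].blend.CamB.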


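```csharp
ICinemachineCamera TopCameraFromPriorityQueue()
{
    CinemachineCore core = CinemachineCore.Instance;
    Camera outputCamera = OutputCamera;
    int mask = outputCamera == null ? ~0 : outputCamera.cullingMask;
    int numCameras = core.VirtualCameraCount;
    for (int i = 0; i < numCameras; ++i)
    {
        var cam = core.GetVirtualCamera(i);
        GameObject go = cam != null ? cam.gameObject : null;
        if (go != null && (mask & (1 << go.layer)) != 0)
            return cam;
    }
    return null;
}

private void UpdateFrame0(float deltaTime)
{
    // Make sure there is a first stack frame
    if (mFrameStack.Count == 0)
        mFrameStack.Add(new BrainFrame());

    // Update the in-game frame (frame 0)
    BrainFrame frame = mFrameStack[0];

    // Are we transitioning cameras?
    var activeCamera = TopCameraFromPriorityQueue();
    var outGoingCamera = frame.blend.CamB;
    // ...
}
```

Note that when picking the highest-priority camera, the virtual camera's Layer decides whether it is eligible: a virtual camera is eligible only if the culling mask (cullingMask) of the real camera bound to the CinemachineBrain includes that virtual camera's layer. If there is no output camera, the mask is ~0 and every layer passes. Since the loop returns the first eligible camera, it relies on CinemachineCore returning the virtual cameras in priority order.

Next, check whether the current frame's active camera differs from the previous frame's. If they differ, update the data in mFrameStack[0], and at the end assign the current frame's active camera to mFrameStack[0].blend.CamB.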


```csharp
private void UpdateFrame0(float deltaTime)
{
    // ...
    if (activeCamera != outGoingCamera)
    {
        // Do we need to create a game-play blend?
        if ((UnityEngine.Object)activeCamera != null
            && (UnityEngine.Object)outGoingCamera != null && deltaTime >= 0)
        {
            // ...
        }
        // Set the current active camera
        frame.blend.CamB = activeCamera;
    }
    // ...
}
```
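The layer filter in TopCameraFromPriorityQueue and the "has the live camera changed?" check at the start of UpdateFrame0 can be modeled in a few lines. This is a hypothetical Python sketch: VCam, Frame, top_camera and update_live_camera are illustrative names, the camera list is assumed to be pre-sorted by priority (as the early return in the C# loop implies), and None stands for "no output camera".

```python
from dataclasses import dataclass
from typing import List, Optional

ALL_LAYERS = ~0  # same idea as the ~0 fallback: every layer bit set


@dataclass(eq=False)  # compare cameras by identity, like object references
class VCam:
    name: str
    layer: int  # Unity layer index, 0..31


@dataclass
class Frame:
    cam_b: Optional[VCam] = None  # live camera recorded by the previous update


def top_camera(cams_by_priority: List[VCam],
               culling_mask: Optional[int]) -> Optional[VCam]:
    """Return the highest-priority camera whose layer the output camera renders."""
    mask = ALL_LAYERS if culling_mask is None else culling_mask
    for cam in cams_by_priority:
        if mask & (1 << cam.layer):
            return cam
    return None


def update_live_camera(frame: Frame, cams_by_priority: List[VCam],
                       culling_mask: Optional[int]) -> bool:
    """Return True if the live camera changed this frame."""
    active = top_camera(cams_by_priority, culling_mask)
    outgoing = frame.cam_b
    if active is outgoing:
        return False
    # A blend from `outgoing` to `active` would be set up here
    frame.cam_b = active
    return True
```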

Then the data in mFrameStack[0] is updated; this is mainly the blend-related logic:


```csharp
private void UpdateFrame0(float deltaTime)
{
    // ...
    if (activeCamera != outGoingCamera)
    {
        // Do we need to create a game-play blend?
        if ((UnityEngine.Object)activeCamera != null
            && (UnityEngine.Object)outGoingCamera != null && deltaTime >= 0)
        {
            // Create a blend (curve will be null if a cut)
            var blendDef = LookupBlend(outGoingCamera, activeCamera);
            float blendDuration = blendDef.BlendTime;
            float blendStartPosition = 0;
            if (blendDef.BlendCurve != null && blendDuration > UnityVectorExtensions.Epsilon)
            {
                if (frame.blend.IsComplete)
                    frame.blend.CamA = outGoingCamera;  // new blend
                else
                {
                    // Special case: if backing out of a blend-in-progress
                    // with the same blend in reverse, adjust the blend time
                    // to cancel out the progress made in the opposite direction
                    if ((frame.blend.CamA == activeCamera
                        || (frame.blend.CamA as BlendSourceVirtualCamera)?.Blend.CamB == activeCamera)
                        && frame.blend.CamB == outGoingCamera)
                    {
                        // How far have we blended? That is what we must undo
                        var progress = frame.blendStartPosition
                            + (1 - frame.blendStartPosition) * frame.blend.TimeInBlend / frame.blend.Duration;
                        blendDuration *= progress;
                        blendStartPosition = 1 - progress;
                    }
                    // Chain to existing blend
                    frame.blend.CamA = new BlendSourceVirtualCamera(
                        new CinemachineBlend(
                            frame.blend.CamA, frame.blend.CamB,
                            frame.blend.BlendCurve, frame.blend.Duration,
                            frame.blend.TimeInBlend));
                }
            }
            frame.blend.BlendCurve = blendDef.BlendCurve;
            frame.blend.Duration = blendDuration;
            frame.blend.TimeInBlend = 0;
            frame.blendStartPosition = blendStartPosition;
        }
        // Set the current active camera
        frame.blend.CamB = activeCamera;
    }
    // ...
}
```

Both cases below apply only when the blend definition returned by LookupBlend has a curve and a duration greater than zero; otherwise the switch is a cut.

- If the blend in mFrameStack[0] has already completed, a new blend from the previous frame's active camera to the current frame's active camera is recorded in mFrameStack[0] (CamA is set to the outgoing camera).
- If the blend in mFrameStack[0] has not completed yet, BlendSourceVirtualCamera wraps the unfinished blend as a virtual camera (an intermediate result) and assigns it to mFrameStack[0].blend.CamA as the starting camera of the new blend. The intent is to chain the new blend onto the previous one.
  - A special case has to be handled here: if the previous blend is still in progress and the new blend is its exact reverse (the start and target cameras are swapped), the new blend's parameters are adjusted so the transition stays smooth.
  - For example, suppose the previous blend goes from camera A to camera B with duration C and is 20% done. The new blend goes from camera B to camera A with an original duration D. D is changed to **D * 20%**, and the blend starts directly at 80% progress.
  - You can think of it as cancelling the previous blend and carrying the progress it already made over into the new blend.
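The special-case arithmetic can be checked with a small function. This Python sketch transcribes the progress formula from the code above; reverse_blend_params and its parameter names are hypothetical, and durations are in seconds.

```python
from typing import Tuple


def reverse_blend_params(start_position: float, time_in_blend: float,
                         old_duration: float,
                         new_duration: float) -> Tuple[float, float]:
    """Return (adjusted duration, adjusted start position) for a blend that
    reverses an in-progress blend, using the formula from UpdateFrame0."""
    # How far the in-progress blend has gone, including its own start offset
    progress = start_position + (1 - start_position) * time_in_blend / old_duration
    # Only the distance actually covered has to be undone...
    duration = new_duration * progress
    # ...so the reverse blend starts that far from its own end
    return duration, 1 - progress
```

With the numbers from the example (an in-progress blend of C = 2 s that has run 0.4 s, i.e. 20%, and a new blend of D = 1.5 s), the reverse blend lasts about 0.3 s and starts at a position of 0.8.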

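Finally, the blend progress is advanced: TimeInBlend grows by deltaTime (a negative deltaTime adds the full Duration, which completes the blend at once), and once the blend is complete, CamA, BlendCurve, Duration and TimeInBlend are reset so that only CamB remains live.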


```csharp
private void UpdateFrame0(float deltaTime)
{
    // ...
    // Advance the current blend (if any)
    if (frame.blend.CamA != null)
    {
        frame.blend.TimeInBlend += (deltaTime >= 0) ? deltaTime : frame.blend.Duration;
        if (frame.blend.IsComplete)
        {
            // No more blend
            frame.blend.CamA = null;
            frame.blend.BlendCurve = null;
            frame.blend.Duration = 0;
            frame.blend.TimeInBlend = 0;
        }
    }
}
```
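The advance-and-reset step is small enough to model directly. A minimal Python sketch, assuming a hypothetical FrameBlend record that keeps only the fields involved (the curve is omitted); it mirrors the two rules in the C# code: a negative delta time adds the full duration, and a finished blend is cleared so that only cam_b stays live.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FrameBlend:
    cam_a: Optional[str] = None  # None means "no blend in progress"
    cam_b: Optional[str] = None
    duration: float = 0.0
    time_in_blend: float = 0.0

    @property
    def is_complete(self) -> bool:
        return self.cam_a is None or self.time_in_blend >= self.duration


def advance(blend: FrameBlend, delta_time: float) -> None:
    if blend.cam_a is None:
        return  # nothing to advance
    # A negative delta_time jumps straight to the end of the blend
    blend.time_in_blend += delta_time if delta_time >= 0 else blend.duration
    if blend.is_complete:
        # Blend finished: clear it, leaving only cam_b live
        blend.cam_a = None
        blend.duration = 0.0
        blend.time_in_blend = 0.0
```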

ComputeCurrentBlend

The logic of ComputeCurrentBlend is fairly simple: it mainly copies the properties of mFrameStack.frame.blend into mFrameStack.frame.workingBlend, then copies those into outputBlend, which is finally stored in CinemachineBrain.mCurrentLiveCameras.

```csharp
public void ManualUpdate()
```

Updating Virtual Camera State

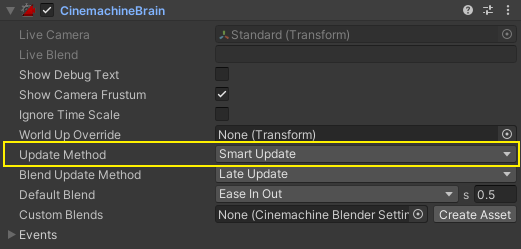

Virtual camera state is updated by CinemachineBrain.UpdateVirtualCameras. Depending on the CinemachineBrain's Update Method setting (see the figure above), it is called from CinemachineBrain.ManualUpdate (LateUpdate / SmartUpdate) or from CinemachineBrain.AfterPhysics (FixedUpdate / SmartUpdate).

```csharp
public void ManualUpdate()
```

Note that when Update Method is SmartUpdate, both call sites also call UpdateTracker.OnUpdate.
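The dispatch described above can be summarized as a small lookup. This Python sketch only encodes what the text states (which hook runs UpdateVirtualCameras for each Update Method, and that SmartUpdate adds an UpdateTracker.OnUpdate call); UpdateMethod and calls_for are hypothetical names, and any special cases the real ManualUpdate and AfterPhysics handle are left out.

```python
from enum import Enum
from typing import Dict, List, Set


class UpdateMethod(Enum):
    FIXED_UPDATE = "FixedUpdate"
    LATE_UPDATE = "LateUpdate"
    SMART_UPDATE = "SmartUpdate"


# Which Update Method values let each brain hook update the virtual cameras
HOOKS: Dict[str, Set[UpdateMethod]] = {
    "ManualUpdate": {UpdateMethod.LATE_UPDATE, UpdateMethod.SMART_UPDATE},
    "AfterPhysics": {UpdateMethod.FIXED_UPDATE, UpdateMethod.SMART_UPDATE},
}


def calls_for(hook: str, method: UpdateMethod) -> List[str]:
    """Calls a brain hook makes for the given Update Method (simplified model)."""
    if method not in HOOKS[hook]:
        return []
    calls = []
    if method is UpdateMethod.SMART_UPDATE:
        calls.append("UpdateTracker.OnUpdate")  # SmartUpdate tracks targets first
    calls.append("UpdateVirtualCameras")
    return calls
```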

UpdateVirtualCameras

```csharp
private void UpdateVirtualCameras(CinemachineCore.UpdateFilter updateFilter, float deltaTime)
```

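From the code above, UpdateVirtualCameras mainly does two things:

- It calls CinemachineCore.Instance.UpdateAllActiveVirtualCameras, which updates the state of standby and active cameras. The call chain is:
  - CinemachineCore.Instance.UpdateVirtualCamera: adds extra handling so the same camera is not updated more than once per frame
  - CinemachineVirtualCameraBase.InternalUpdateCameraState: the logic that actually updates the camera state
- It calls mCurrentLiveCameras.UpdateCameraState, which updates the state of the active camera. The call chain is the same:
  - CinemachineCore.Instance.UpdateVirtualCamera: adds extra handling so the same camera is not updated more than once per frame
  - CinemachineVirtualCameraBase.InternalUpdateCameraState: the logic that actually updates the camera state

Both steps update the active camera's state, which is why CinemachineCore.Instance.UpdateVirtualCamera contains extra handling to avoid updating the same camera more than once in a single frame.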
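One simple way to picture the "at most once per frame" guard is a frame stamp per camera. The Python sketch below is hypothetical (OncePerFrameUpdater is not a Cinemachine type) and only tracks a frame counter; the real CinemachineCore.UpdateVirtualCamera may take more into account.

```python
from typing import Callable, Dict, Hashable


class OncePerFrameUpdater:
    """Hypothetical guard: runs a camera's update at most once per frame number."""

    def __init__(self, do_update: Callable[[Hashable], None]) -> None:
        self._do_update = do_update
        self._last_frame: Dict[Hashable, int] = {}

    def update(self, cam: Hashable, frame: int) -> bool:
        if self._last_frame.get(cam) == frame:
            return False  # already updated in this frame: skip
        self._last_frame[cam] = frame
        self._do_update(cam)
        return True
```

Both call paths can then go through the same updater: whichever reaches the live camera first in a frame does the work, and the second call is a no-op.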

UpdateAllActiveVirtualCameras

UpdateAllActiveVirtualCameras is called every frame to update the state of the relevant virtual cameras, namely the active virtual cameras and the standby virtual cameras.

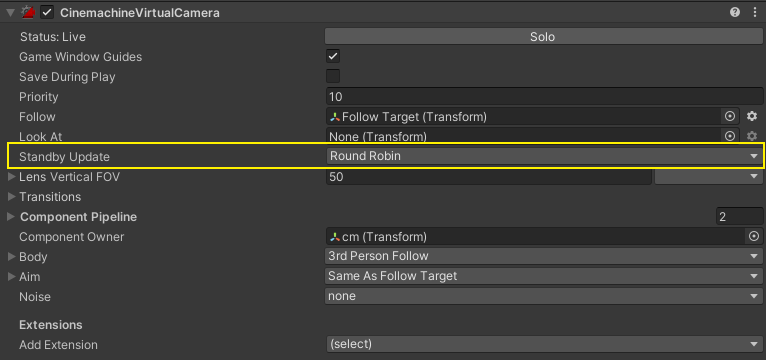

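How a camera is updated while on standby is controlled by the Standby Update setting of its CinemachineVirtualCamera:

- Round Robin: round-robin updating; each frame, CinemachineCore picks one standby virtual camera whose Standby Update is Round Robin and updates it

- Always: the virtual camera updates its state every frame, even while on standby

- Never: the virtual camera does not update its state while on standby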
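The three Standby Update policies can be modeled as a per-frame scheduler. In this hypothetical Python sketch (StandbyUpdate, Cam and StandbyScheduler are illustrative names), live cameras and Always cameras are updated every frame, Never cameras are skipped while on standby, and exactly one Round Robin camera is picked per frame by rotating through them. How Cinemachine actually chooses the next round-robin camera is not covered here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class StandbyUpdate(Enum):
    NEVER = 0
    ALWAYS = 1
    ROUND_ROBIN = 2


@dataclass
class Cam:
    name: str
    live: bool
    standby_update: StandbyUpdate


class StandbyScheduler:
    """Hypothetical scheduler deciding which cameras get updated in a frame."""

    def __init__(self) -> None:
        self._rr_index = 0  # position in the round-robin rotation

    def cameras_to_update(self, cams: List[Cam]) -> List[str]:
        result: List[str] = []
        round_robin: List[str] = []
        for cam in cams:
            if cam.live or cam.standby_update is StandbyUpdate.ALWAYS:
                result.append(cam.name)
            elif cam.standby_update is StandbyUpdate.ROUND_ROBIN:
                round_robin.append(cam.name)
            # NEVER cameras on standby are simply skipped
        if round_robin:
            # Exactly one round-robin camera per frame, rotating through them
            result.append(round_robin[self._rr_index % len(round_robin)])
            self._rr_index += 1
        return result
```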

The rough flow is as follows: